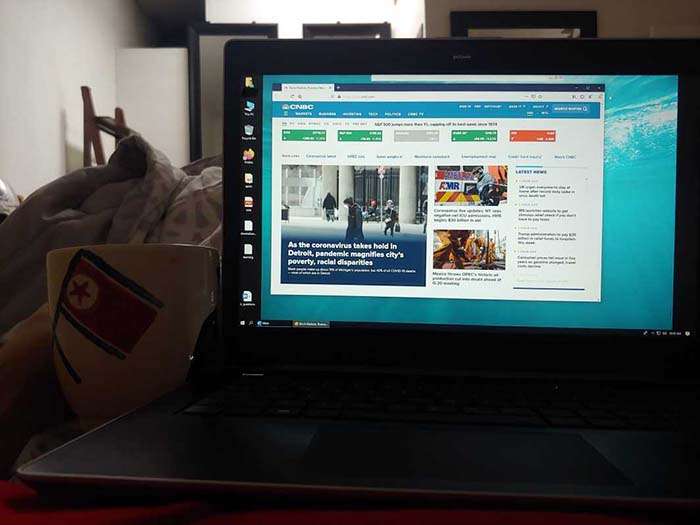

Another week of quarantined programming, video games, and hitting f5 on economic news.

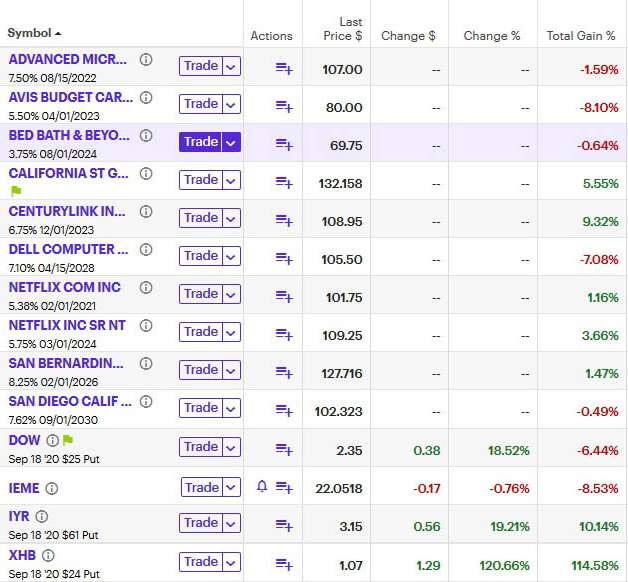

Investments

The recent peak was a chance to sell most of my "is this a dip?" stock and ETF holdings for a modest profit. The glaring exception is oil, which is now both a headline and a punchline.

Oh well, it'll be back. This is what I get for not being a conscientious investor. Speaking of long term investments, bonds are fun and low maintenance. For the uninitiated, here's everything I know about bonds, using etrade's terrible UI as a visual guide:

At least with etrade as a broker, you're looking at $1000-ish increments of investment. The bonds themselves have a market value independent from their face value against which interest/coupon is paid. It seems like selling bonds requires advertising that you're willing to sell and letting the broker match you up with a buyer. I put out a sell feeler to see if I could make a tidy 10% on one investment and never heard back.

So scrolling up, for whatever reason people want to buy a 6.75% Centurylink bond but not so much a 7.10% Dell bond. I presume this is some combination of return (coupon/maturity) and risk - I stuck with companies I expected to remain in business (or be acquired) through these troubling times. Anyway, they're better than 0.0000000001% interest or (in my opinion) even cash. That said, if the bond market were to tank you could be stuck with each holding until maturity. So interleaving maturity and having other investments is important.
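To put numbers on the return side of that tradeoff, here's a rough sketch of how coupon, market price, and maturity fit together. The price and years-to-maturity in the example are made-up placeholders, not quotes for the Centurylink or Dell bonds, and the yield-to-maturity function is the usual back-of-envelope approximation rather than an exact solve.

```python
def current_yield(coupon_rate, face, price):
    """Annual coupon income divided by what you actually pay for the bond."""
    return coupon_rate * face / price


def approx_yield_to_maturity(coupon_rate, face, price, years):
    """Rough YTM: coupon plus the annualized drift back to face value,
    divided by the average of face value and purchase price."""
    annual_coupon = coupon_rate * face
    annual_pull_to_par = (face - price) / years
    return (annual_coupon + annual_pull_to_par) / ((face + price) / 2)


if __name__ == "__main__":
    # Hypothetical: $1000 face, 6.75% coupon, bought at $950 with 5 years left.
    print(f"current yield: {current_yield(0.0675, 1000, 950):.2%}")
    print(f"approx YTM:    {approx_yield_to_maturity(0.0675, 1000, 950, 5):.2%}")
```

A bond bought below face value yields more than its coupon, which is one reason two bonds with similar coupons can be in very different demand.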

Options

I'm squeamish about calls in this weird pumped market. I haven't dedicated the hours to work out some actual options trading strategies; without a copilot I'm convinced I'm going to do something wrong as soon as I add a leg. Still, a few puts here and there give me something to f5:

- DOW 09/18 $25p - SPY premiums are high right now and the bailouts seem directed at S&P companies, so I wanted to avoid those anyway. I should have gone with DIA or something more actively traded. Barcharts was a pretty good guide.

- IYR 09/18 $61p - based on the simple idea that the normal real estate cycle combined with unemployment combined with other economic impacts will hit real estate to an extent that isn't yet priced in.

- XHB 09/18 $24p - same deal but with homebuilding, including the fallout of idle time during quarantine.
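For anyone new to puts, the math at expiry is simple enough to sketch. The premium in the example is a placeholder I made up, not what any of these contracts actually cost, and fees and selling before expiry are ignored.

```python
def long_put_profit(strike, premium, price_at_expiry, contracts=1, multiplier=100):
    """Profit or loss at expiry for a long put, ignoring fees and early exit."""
    intrinsic = max(strike - price_at_expiry, 0.0)
    return (intrinsic - premium) * multiplier * contracts


def breakeven(strike, premium):
    """Underlying price at expiry where the put exactly pays for itself."""
    return strike - premium


if __name__ == "__main__":
    # Hypothetical: a $61 strike put bought for a $3.00 premium.
    for price in (65, 61, 58, 50):
        print(price, long_put_profit(61, 3.00, price))
    print("breakeven:", breakeven(61, 3.00))
```

The most a long put can lose is the premium paid, which is a big part of the appeal when you just want something to f5.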

If nothing else, the unlinked interplay between indexes and headlines has been funny to watch. But the 2020 news just keeps coming:

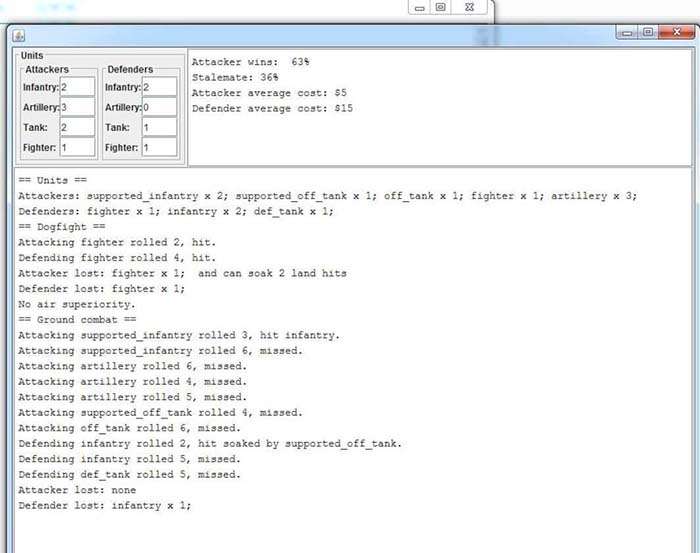

Axis & Allies, World War... 1(?)

In addition to a few concurrent Wargear matches, Jon is remotely hosting Axis and Allies: 1914. The game is well-suited to tele-wargaming; it's long and deliberate and requires very little interaction from anyone but the attacker and dice roller.

The game itself is an interesting departure from the WWII editions, but maintains most of the core elements.

Same:

- Dice mechanics

- Turn stages/purchases

- Movement, for the most part

- Submerging submarines, battleship bombardment, reduced-range fighters

Different:

- Board, obviously, the territory is very different from the standard WWII layout

- To simulate trench warfare, attackers/defenders only roll once, so countries end up 'contested'

- Fighters claim air superiority and promote artillery

- Tanks and the United States only come into play in round four; attacking tanks soak a point of damage for free

The combat was different enough that I had to modify my Anniversary Edition simulator to do trench warfare.
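This isn't the actual simulator, just a minimal sketch of the single-round idea. Units are represented only by the number they hit on (the usual roll-a-d6-at-or-under mechanic), casualties come off the weakest units first as a simplifying assumption, and air superiority, artillery promotion, and the tank damage soak are all left out. The unit values in the usage line are placeholders, not the 1914 stat sheet.

```python
import random


def roll_hits(unit_values, rng):
    """Each unit rolls one d6 and scores a hit on a roll <= its value."""
    return sum(1 for value in unit_values if rng.randint(1, 6) <= value)


def remove_casualties(unit_values, hits):
    """Take losses from the weakest units first (a simplifying assumption)."""
    return sorted(unit_values)[hits:]


def trench_round(attackers, defenders, rng=random):
    """One simultaneous round of combat; nobody rolls again afterwards."""
    attacker_hits = roll_hits(attackers, rng)
    defender_hits = roll_hits(defenders, rng)
    attackers_left = remove_casualties(attackers, defender_hits)
    defenders_left = remove_casualties(defenders, attacker_hits)
    if attackers_left and defenders_left:
        outcome = "contested"
    elif attackers_left:
        outcome = "attacker holds"
    elif defenders_left:
        outcome = "defender holds"
    else:
        outcome = "empty"
    return outcome, attackers_left, defenders_left


def contested_rate(attackers, defenders, trials=10_000, seed=1914):
    """Fraction of simulated battles that leave the territory contested."""
    rng = random.Random(seed)
    contested = sum(
        trench_round(attackers, defenders, rng)[0] == "contested"
        for _ in range(trials)
    )
    return contested / trials


if __name__ == "__main__":
    # Placeholder values: four attackers hitting on 2, three defenders hitting on 3.
    print(contested_rate([2, 2, 2, 2], [3, 3, 3]))
```

Because there is no loop until one side is wiped out, evenly sized stacks end up contested most of the time, which matches how the board tends to look.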

Memes and more

The Zoom era is upon us. I've been in chats, movie nights, and even played a revival of the 90s classic You Don't Know Jack. Other chat media is still around, of course.

I finally gave in and watched The Rise of Skywalker. I guess the good part was all the nostalgia and tearjerkers for movies that weren't this one - deceased characters, Luke's X-Wing, even Liam Neeson('s voice). The bad parts were - well - the plot is just a ho-hum scavenger hunt, the finale was written on the wall from the start, and it really didn't need a heavy-handed "well the moral of the story is that friendships win wars against impossible odds". Oh and some time bending on par with GoT season seven.

Keras

Site meta

So I've steadily built a code base for a variety of things to include this site. The project has included things like auto-thumbnailing and tag generation. My post-to-post workflow has been:

1. Dump media into a directory

2. Run a script that autogenerates a starter markdown file based on those images

3. Run a COTS tool to resize and compress graphics, once for screenshots and once for photos

4. Author the post

5. Run the markdown parser/page generator
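Step 2 is the kind of thing that's only a few lines of Python. This is a hypothetical sketch rather than the site's actual script: the function name, the set of file extensions, and the layout of the generated markdown are all assumptions.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif"}  # assumed set of media types


def starter_markdown(media_dir, title="Untitled"):
    """Return a markdown skeleton with one image reference per file in media_dir."""
    images = sorted(
        p.name for p in Path(media_dir).iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES
    )
    lines = [title, ""]
    for name in images:
        # Alt text defaults to the file name without its extension.
        lines += [f"![{Path(name).stem}]({name})", ""]
    return "\n".join(lines)
```

Calling `starter_markdown("some/media/dir", "Post title")` gives a file with the images already in place, so authoring is mostly writing the text between them.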

Combining 2 and 3 wasn't too difficult once I went through the legwork of looking up how to set jpg compression level. I took the opportunity to implement a border crop feature that was useful for removing some black bars from recent SotC screenshots. The final piece was automating the image resize policy. For the moment, it's based on hard-coded known sizes (e.g. 1920x1080 or a D700 sensor).
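The border crop boils down to finding the bounding box of everything that isn't (nearly) black. Here's a dependency-free sketch that works on a grayscale image stored as a list of rows; the real thing would presumably pull pixels from an imaging library, and the threshold of 16 is an arbitrary assumption to tolerate compression noise in the bars.

```python
def content_box(pixels, threshold=16):
    """Return (left, top, right, bottom) of the region brighter than threshold.

    pixels is a list of rows of grayscale values (0-255). right and bottom
    are exclusive, matching the usual crop-box convention. Returns None if
    the whole image is at or below the threshold.
    """
    rows = [y for y, row in enumerate(pixels) if max(row) > threshold]
    if not rows:
        return None
    width = len(pixels[0])
    cols = [x for x in range(width) if max(row[x] for row in pixels) > threshold]
    return cols[0], rows[0], cols[-1] + 1, rows[-1] + 1


def crop(pixels, box):
    """Cut the image down to the given box."""
    left, top, right, bottom = box
    return [row[left:right] for row in pixels[top:bottom]]
```

Note that dark pixels inside the content are untouched; only full rows and columns of near-black at the edges get trimmed.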

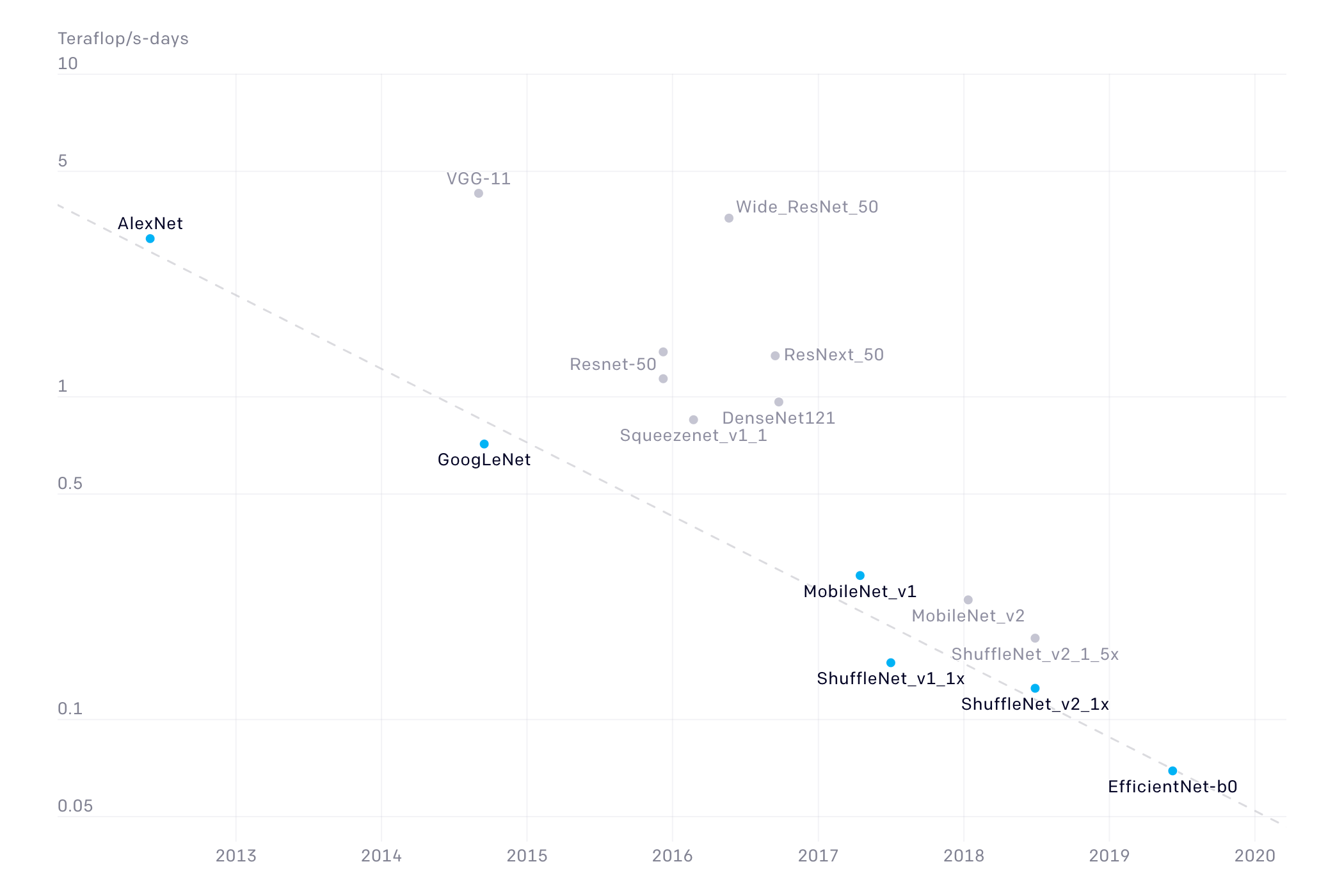

Classifying images as photos (resize) or screenshots (resize if > 1920x1080) seemed like a pretty standard application of Keras.
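Until a classifier earns its keep, the hard-coded policy looks roughly like this sketch. Only 1920x1080 and "a D700 sensor" come from the post; the 4256x2832 frame size, the extra screenshot resolutions, and the 1920 pixel long-side target for photos are my illustrative assumptions.

```python
SCREENSHOT_MAX = (1920, 1080)        # screenshots larger than this get resized
KNOWN_PHOTO_SIZES = {(4256, 2832)}   # assumed full-resolution D700 frame
KNOWN_SCREENSHOT_SIZES = {(1920, 1080), (2560, 1440), (3840, 2160)}  # assumed
PHOTO_TARGET_LONG_SIDE = 1920        # hypothetical target for resized photos


def classify_by_size(width, height):
    """Guess the image type purely from its dimensions."""
    size = (max(width, height), min(width, height))  # treat portrait like landscape
    if size in KNOWN_PHOTO_SIZES:
        return "photo"
    if size in KNOWN_SCREENSHOT_SIZES:
        return "screenshot"
    return "unknown"


def target_size(width, height):
    """Return the (width, height) to resize to, or None to leave the image alone."""
    kind = classify_by_size(width, height)
    if kind == "photo":
        scale = PHOTO_TARGET_LONG_SIDE / max(width, height)
    elif kind == "screenshot" and (
        width > SCREENSHOT_MAX[0] or height > SCREENSHOT_MAX[1]
    ):
        scale = min(SCREENSHOT_MAX[0] / width, SCREENSHOT_MAX[1] / height)
    else:
        return None
    return round(width * scale), round(height * scale)
```

The obvious weakness is the "unknown" branch: any cropped photo or oddly sized capture falls through, which is the gap a classifier would fill.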

A brief sidetrack: Python and Keras suck

Well, not exactly. They're awesome and the best tool for the job, but it's agonizing that no one in the Python community can make interoperability work. This is mostly a Keras/Tensorflow/PyTorch experience, and those admittedly are a more recent thing (but not *that* recent). In a more general sense, you need look no farther than the global agony of moving to Python 3 to see the impact of undercooked technologies.

Back to machine learning, after many, many hours of searching on every new warning or error, I found my golden combination of ML-related software:

imageio 2.6.1

Keras 2.3.1

Keras-Applications 1.0.8

Keras-Preprocessing 1.1.0

numpy 1.18.1

nvidia-ml-py3 7.352.0

opencv-python 4.1.2.30

pandas 1.0.3

Pillow 7.0.0

scikit-image 0.16.2

scikit-learn 0.22.1

scikit-umfpack 0.3.2

scipy 1.4.1

tensorboard 2.1.1

tensorflow 2.1.0

tensorflow-addons 0.8.3

tensorflow-datasets 2.1.0

tensorflow-estimator 2.1.0

tensorflow-gpu 2.1.0

tensorflow-gpu-estimator 2.1.0

tensorflow-metadata 0.21.1

tensorlayer 2.2.1

tf-slim 1.0

torch 1.4.0

torchvision 0.5.0

... all using CUDA 10.1 (not 10.2, that does not work). This worked for my existing code base and is reasonably up to date, so, lock it in.
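Having found a combination that works, it's worth being able to detect drift from it. Here's a small sketch using the standard library's `importlib.metadata` (Python 3.8+); the `PINS` dict only copies a few entries from the list above, and the function names are my own.

```python
from importlib import metadata

# A few pins copied from the list above; extend as needed.
PINS = {
    "Keras": "2.3.1",
    "numpy": "1.18.1",
    "tensorflow": "2.1.0",
    "torch": "1.4.0",
}


def installed_version(package):
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pins, lookup=installed_version):
    """Map each package that differs from its pin to (wanted, found)."""
    mismatches = {}
    for package, wanted in pins.items():
        found = lookup(package)
        if found != wanted:
            mismatches[package] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for package, (wanted, found) in find_mismatches(PINS).items():
        print(f"{package}: want {wanted}, found {found or 'not installed'}")
```

This says nothing about the CUDA toolkit version, which lives outside of pip and has to be checked separately.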

With that settled, I downloaded about two dozen Keras/TF/Torch projects from Github, hoping to just run their example code. Ticking them off one-by-one, they all fell to one or more of the following:

- Python 2.x

- Linux only (okay, that's on me)

- Keras 1.x, sometimes even more restrictive

- Tensorflow 1.x, sometimes even more restrictive

- Something wrong with the project itself, because they're mostly grad students mashing together a poorly-written adaptation of some published algorithm

I've spent hours upon hours slowly creeping toward a setup that works with my hardware and software; reverting to a previous version is not something I want to do because everything else is going to have to change. A lot of these issues came back to Keras removing certain features that everyone used for 1.x projects. They're not superficial changes and the project authors have largely left their projects to be abandonware.

But what about Docker?!? Nope, nope, nope. Not even considering this in a Windows environment trying to hook a web of interdependent machine learning libraries into a Nvidia/.NET framework that provides graphics acceleration.

So what was this rant?

I don't know, think harder about your API. Deprecated stuff can still function, so leave it in. When you shuffle stuff to supporting libraries, they shouldn't only be available on someone's personal web site. Build software for version x or newer.
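"Deprecated stuff can still function" is cheap to do in Python. Here's a sketch of the pattern with the standard `warnings` module; `resize_image` and `scale_image` are made-up names standing in for a new and an old API, not anything from Keras.

```python
import functools
import warnings


def deprecated(replacement):
    """Keep an old entry point working while pointing callers at the new one."""
    def decorate(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            warnings.warn(
                f"{func.__name__} is deprecated; use {replacement} instead",
                DeprecationWarning,
                stacklevel=2,  # attribute the warning to the caller's line
            )
            return func(*args, **kwargs)
        return wrapper
    return decorate


def resize_image(size, scale):
    """Hypothetical new API."""
    width, height = size
    return round(width * scale), round(height * scale)


@deprecated("resize_image")
def scale_image(size, scale):
    """Hypothetical old API, kept as a thin alias rather than removed."""
    return resize_image(size, scale)
```

Old projects that call `scale_image` keep running and get a nudge in the logs, instead of an ImportError two years after the author graduated.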

About that classifier

Ah yes, so I'd been working with a 256x256 classifier on sizeable /photo and /screenshots libraries. It does some convolutions and maxpools down to a reasonable 32x32 before flattening for classification to conceptually two classes (photo/screencap). It trained quickly and learned the training data with high accuracy.

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv_256w_8sz_x16 (Conv2D) | (None, 256, 256, 16) | 3088 |
| conv_256w_8sz_x12 (Conv2D) | (None, 256, 256, 12) | 12300 |
| conv_256w_4sz_x12 (Conv2D) | (None, 256, 256, 12) | 2316 |
| dropout_256w_0.1 (Dropout) | (None, 256, 256, 12) | 0 |
| maxpool_256w_x2 (MaxPooling2 | (None, 128, 128, 12) | 0 |
| conv_128.0w_4sz_x12 (Conv2D) | (None, 128, 128, 12) | 2316 |
| conv_128.0w_2sz_x12 (Conv2D) | (None, 128, 128, 12) | 588 |
| dropout_128.0w_0.1 (Dropout) | (None, 128, 128, 12) | 0 |
| maxpool_128.0w_x2 (MaxPoolin | (None, 64, 64, 12) | 0 |
| conv_64.0w_4sz_x12 (Conv2D) | (None, 64, 64, 12) | 2316 |
| conv_64.0w_2sz_x12 (Conv2D) | (None, 64, 64, 12) | 588 |
| dropout_64.0w_0.1 (Dropout) | (None, 64, 64, 12) | 0 |
| maxpool_64.0w_x2 (MaxPooling | (None, 32, 32, 12) | 0 |
| flatten (Flatten) | (None, 12288) | 0 |
| output_x4 (Dense) | (None, 4) | 49156 |

Total params: 72,668

Trainable params: 72,668

Non-trainable params: 0
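As a sanity check on that summary, the parameter counts can be reproduced by hand. The kernel sizes come from the "sz" part of the layer names, and the three input channels (RGB) are inferred from the first layer's count rather than stated anywhere.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(inputs, units):
    """Weights plus one bias per unit for a Dense layer."""
    return inputs * units + units


# (kernel size, input channels, filters) for each Conv2D layer in order.
CONV_LAYERS = [
    (8, 3, 16), (8, 16, 12), (4, 12, 12),   # 256x256 stage
    (4, 12, 12), (2, 12, 12),               # 128x128 stage
    (4, 12, 12), (2, 12, 12),               # 64x64 stage
]

conv_counts = [conv2d_params(*layer) for layer in CONV_LAYERS]
flattened = 32 * 32 * 12                     # after three 2x max pools
total = sum(conv_counts) + dense_params(flattened, 4)

print(conv_counts)  # [3088, 12300, 2316, 2316, 588, 2316, 588]
print(total)        # 72668
```

About two thirds of the parameters sit in the final dense layer, so the seven convolutions are a small part of what the model can memorize.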

Validation accuracy flattened around 60-70% though. I turned some knobs:

- Removed and re-added higher dropout rates.

- Randomly scaled inputs to some reasonable factor between 256 and the original size, then sampled patches of them.

- The normal translation/mirroring/noise stuff.
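The scale-then-patch augmentation is mostly bookkeeping, so here's a sketch of just the geometry, leaving the actual pixel resampling to whatever imaging library is in use. Drawing the scaled short side uniformly is my assumption; "some reasonable factor" could just as well be sampled some other way.

```python
import random

PATCH = 256  # network input size from the post


def random_scale_and_patch(width, height, rng=random):
    """Pick a scaled image size and a PATCH x PATCH crop box inside it.

    The short side is scaled to a random length between PATCH and its
    original length, so images are only ever shrunk. Returns
    ((scaled_w, scaled_h), (left, top, right, bottom)).
    """
    short = min(width, height)
    if short < PATCH:
        raise ValueError("image is smaller than the patch size")
    new_short = rng.randint(PATCH, short)
    scale = new_short / short
    scaled_w = max(PATCH, round(width * scale))
    scaled_h = max(PATCH, round(height * scale))
    left = rng.randint(0, scaled_w - PATCH)
    top = rng.randint(0, scaled_h - PATCH)
    return (scaled_w, scaled_h), (left, top, left + PATCH, top + PATCH)


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(random_scale_and_patch(1920, 1080, rng))
```

At full scale a patch is a small window of fine texture, while at the smallest scale it covers most of the frame, so the model sees both ends.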

I was going to dig into hyperparameters and retry with batchnorm, but diverted to a new model based on the idea that seven convolutional layers is getting toward the feature recognition domain, and this isn't really what I want. I'm looking to characterize input based, perhaps, more on gradients than edges (maxpool bad?). I don't want the model to learn that human faces are generally photos and zombie faces are generally video games. I want it to see the grit of a photo, the depth of field of an SLR photo, the smooth rendering of a screenshot, or even its pixelation for older games.

Maybe a deep network does that anyway, just throw training at it. But it was worth experimenting with a shallower network.

| Layer (type) | Output Shape | Param # |
|---|---|---|
| conv_256w_8sz_x16 (Conv2D) | (None, 86, 86, 128) | 3584 |
| batch_normalization (BatchNo | (None, 86, 86, 128) | 512 |
| gaussian_noise (GaussianNois | (None, 86, 86, 128) | 0 |
| conv2d (Conv2D) | (None, 86, 86, 32) | 4128 |
| batch_normalization_1 (Batch | (None, 86, 86, 32) | 128 |
| dropout (Dropout) | (None, 86, 86, 32) | 0 |
| max_pooling2d (MaxPooling2D) | (None, 21, 21, 32) | 0 |
| flatten (Flatten) | (None, 14112) | 0 |
| dropout_1 (Dropout) | (None, 14112) | 0 |
| dense (Dense) | (None, 64) | 903232 |
| batch_normalization_2 (Batch | (None, 64) | 256 |
| dropout_2 (Dropout) | (None, 64) | 0 |
| output_x4 (Dense) | (None, 4) | 260 |

Total params: 912,100

Trainable params: 911,652

Non-trainable params: 448

Of course I turned a bunch of knobs (because wheeeeeee!). Strided input and a 4x max pool to get the many convolutional kernels down to a reasonable dense layer. Results: 60-70% accuracy. Blast.
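The shapes and counts in the shallow model's summary pin down a few settings the post doesn't spell out. Working backward, they are consistent with a 3x3 kernel at stride 3 with 'same' padding for the first convolution and a 1x1 kernel for the second; treat those as inferences from the numbers, not confirmed settings (the first layer's name still says "8sz", presumably a leftover).

```python
import math


def same_padding_output(size, stride):
    """Spatial output size of a strided conv with 'same' padding."""
    return math.ceil(size / stride)


def pool_output(size, pool):
    """Spatial output size of a non-overlapping max pool with 'valid' padding."""
    return size // pool


def conv2d_params(kernel, in_channels, filters):
    """Weights plus one bias per filter for a square-kernel Conv2D layer."""
    return kernel * kernel * in_channels * filters + filters


after_conv = same_padding_output(256, stride=3)   # 86
after_pool = pool_output(after_conv, pool=4)      # 21
flattened = after_pool * after_pool * 32          # 14112

first_conv = conv2d_params(3, 3, 128)             # 3584, implies a 3x3 kernel
second_conv = conv2d_params(1, 128, 32)           # 4128, implies a 1x1 kernel
dense = flattened * 64 + 64                       # 903232
output = 64 * 4 + 4                               # 260
batchnorm = 4 * (128 + 32 + 64)                   # 896, half of it non-trainable

total = first_conv + second_conv + dense + output + batchnorm
print(total, batchnorm // 2)  # 912100 448
```

Nearly all of the 912,100 parameters are in the 14112-to-64 dense layer, so despite being "shallower" this model has over twelve times the capacity of the first one.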

Divinity

Mine and J's Divinity 2 characters finally departed Fort Joy. Fane has a neat shapeshifter helmet so he can blend in with all the lizardfolk.

The Red Prince does the tanking... I mostly just cast blood rain whenever it's up. Particularly out of combat.

Anyway, the game is really good.

Guns, Love, and Tentacles mop up

Before jumping back into Divinity, we finished up all of the GL&T side quests.

Could have used a raid boss, but I'll settle for the Nibblenomicon.
