There has been

a flurry of interesting plotlines surrounding the past, present, and future of the internet; implosions at

Reddit and

Twitter, Facebook's Twitter clone, and the impact of AI on web search. Simultaneously I have read up on more parochial web matters - the

indieweb,

blogging, and

SEO.

Here's a post about all of them, because they're all intertwined.

Let's start with esteemed sociological discussion since later we will wade into greentexts and "Fuck Spez" graffiti. Hacker News pointed me to

an editorial from 2021 discussing the modern incarnations of analog-era countercultural movements. It was worth the read, though comparing Boyd Rice to Tekashi 6ix9ine was a bit outside my sociological wheelhouse. More relevantly (to me), the author discusses the impact of media platforms on modern movements, how even rebellious speech silently (or overtly) carries commercial branding. Her discussion of the oldweb and modern platforms featured a few familiar themes:

Caroline Busta

Caroline Busta |

As online activity began to centralize around search engines, such as Netscape, Explorer, and Google, in the late-'90s and early-'00s, the internet bifurcated into what became known as the "clearnet," which includes all publicly indexed sites (i.e., big social media, commercial platforms, and anything crawled by major search engines) and the "darknet" or "deep web," which is not publicly indexed.

There were also a number of sites that though officially clearnet, laid the groundwork for a sub-clearnet space that we might think of as a "dark forest" zone-particularly message board forums like Reddit and 4chan, where users can interact without revealing their IRL identity or have this activity impact their real-name SEO.

|

Hey, the dark forest again. I read something about that

last year:

Ideaspace

Ideaspace |

In response to the ads, the tracking, the trolling, the hype, and other predatory behaviors, we're retreating to our dark forests of the internet, and away from the mainstream.

Dark forests like newsletters and podcasts are growing areas of activity. As are other dark forests, like Slack channels, private Instagrams, invite-only message boards, text groups, Snapchat, WeChat, and on and on. This is where Facebook is pivoting with Groups (and trying to redefine what the word "privacy" means in the process).

|

Busta echoes those same sentiments and I'm now wondering where this analogy was first used.

Interestingly,

LLMs may have flipped the script on the 'sub-clearnet' being, well, preferable to platforms that require PII. The influx of AIs and script-assisted astroturfers may soon drive users away from platforms where they could simply be interacting with a marketing bot. Suddenly Zuckerberg demanding your driver's license and a genetic sample is a small price to pay for authentic conversation. And that's why Elon wanted to fight him. Or something.

Caroline Busta

Caroline Busta |

Taken from the title of Chinese sci-fi writer Liu Cixin's 2008 book, "the dark forest" region of the web is becoming increasingly important as a space of online communication for users of all ages and political persuasions. In part, this is because it is less sociologically stressful than the clearnet zone, where one is subject to peer, employer, and state exposure... One forages for content or shares in what others in the community have retrieved rather than accepting whatever the platform algorithms happen to match to your data profile. Additionally, dark forest spaces are both minimally and straightforwardly commercial. There is typically a small charge for entry, but once you are in, you are free to act and speak without the platform nudging your behavior or extracting further value... [Using these platforms] is therefore not analogous to legacy countercultural notions of going off-grid or "dropping out."

|

I'm not sure it has to be either 4chan or TikTok.

Recommendation engines and visibility algorithms can be really nice to have. But we seem to be moving toward an internet where the price of entry for content recommendations is all of your personal data and a high tolerance for embedded advertisements.

At least until

Rob implements

my (likely not original) idea to save the internet.

Caroline Busta

Caroline Busta |

To be sure, none of these spaces are pure, and users are just as vulnerable to echo chambers and radicalization in the dark forest as on pop-stack social media. But in terms of engendering more or less counter-hegemonic potential, the dark forest is more promising because of its relative autonomy from clearnet physics (the gravity, velocity, and traction of content when subject to x algorithm). Unlike influencers and "blue checks," who rely on clearnet recognition for income, status, and even self-worth, dark forest dwellers build their primary communities out of clearnet range-or offline in actual forests, parks, and gardens... The crux of Liu Cixin's book is the creed, when called by the clearnet: "Do not answer! Do not answer!! Do not answer!!! But if you do answer, the source will be located right away. Your planet will be invaded. Your world will be conquered."

|

Side note to any hostile aliens: my domain name is a pseudonym.

Fear and loathing in the sub-clearnet

Inside Higher Ed

Inside Higher Ed |

"EJMR is currently melting down with people convinced their careers are in danger, presumably because they've said some very nasty and/or stupid things in locations that will easily identify them," tweeted Ben Harrell, an assistant professor of economics at Trinity University, in Texas. "In the end, nothing of value will be lost."

|

The synopsis:

- There's a 4chanlike(?) online forum for economists.

- It's anonymous(-ish) and therefore has some measure of unprofessional behavior beyond what'd you'd expect from economic discussion.

- Posts apparently have a hash code that uses the poster's IP address. Somebody reversed the hashing algorithm and published an academic paper revealing the source IP of offensive posts.

- Many of the IP addresses are associated with universities, indicating the forum isn't all basement dwellers posing as economists.

It's a fun intersection of cyber and the dark forest but also

a cautionary tale about anonymity and doing counterculture (or just plain evil) things. I don't know why, when I read this article, I instantly thought of

KO's tales of drama from neuroscience academia.

"No referring sitemaps detected"

Let me briefly pivot to

a very specific technical issue I encountered with Google Search Console. This digression is pretty skippable but does fit in to the larger intent of this post. Google Search Console, by the way, is a tool Google provides to let you check if your site has been indexed (is available for search results). It also reports how often your pages or images show up in a search.

I've posted a few times about my mild fascination with

SEO (trying to get your website to the top of search results). I have no compelling interest in modifying my site content to be search engine-friendly, but I'm amenable to data shaping that might help guide searchers to any worthwhile information on my site. Most of all,

I'm occasionally entertained by the the perspectives of the SEO crowd. So when I saw the Search Console error message, "no referring site maps detected" attached to many of my pages, I indulged a little troubleshooting.

Google Support

There were a few Google Support requests matching my issue from February 2023. I should also note that around the same time I

experienced bulk de-indexing.

Jovanovic

Jovanovic |

I am trying to index the URLs from my website, While doing so I keep seeing this error in the Sitemap section "no referring sitemap detected" (as seen in the attached picture). Even though I have a correct sitemap submitted (as seen in the attachment), I keep getting this error. How can I fix this?

Please help

Thanks

|

I had been briefly

worried that my code had somehow fatfingered a url when it generated my sitemap (software is traditionally not susceptible to this physical world issue, but you never know). Nope, Search Console said it parsed the sitemap just fine and ctrl-f confirmed the urls were correct. So what gives? The well-lit forest of Google Support offered a hilarious answer:

JWP

JWP |

Hi Vid

There is nothing to solve here.

The page has been discovered, so Google knows about it. In 99.9% of all case going forward, it'll then just swing by and re-crawl it once in a while (with no reference to the sitemap).

There is a common misconception, that sitemaps are really important [to indexing]. That's not actually the case (certainly for smaller sites with good internal structures). The Google bot is a very capable spider and once it's gained entry to your site and assuming that the pages are inter-linked in a sensible way, it is perfectly capable of indexing the entire site, without any sitemap.

|

This is exactly how I picture Apple community support. Kind of like Stack Overflow's traditional, "but why do you want to do this?" but with a very heavy dose of brand loyalty.

"Ignore the erroneous information, it doesn't matter because Google is like really good at internet."

And

it wasn't a one-off, another response to a different support request about the same issue:

Gupta

Gupta |

Hello Skinly Aesthetics,

Googlebot found this page from another page and indexed it before crawling the sitemap file. But don't worry because this is not an issue; the purpose of sitemap files is also to help search engines in the discoverability of the pages.

|

That's probably not true for Skinly Aesthetics and it's certainly not true in my case -

my sitemap and most of my urls have been around for years. The weird deference to the platform was pretty offputting, does no one care about inaccurate data? Whether or not a random walk found Skinly's page first, doing a map lookup for that url is not compute-intensive.

barryhunter

barryhunter |

In practice Google only notes what sitemap(s) the is in when it actually crawls.

So 'URL is unknown' doesn't ever show sitemap (nor referring page!) details.

Until a crawl is actually at least attempted, won't get any details.

|

Nope. Navigating to an indexed page via the sitemap listing still reports this issue.

/r/seo

The next search result was,

some say, the place I should have started. While I found /r/seo's answer to be satisfactory, it was far from definitive.

To paraphrase their answer, "Google Search Console is notoriously unreliable."

Oh, it's just unreliable. And notorious for it. He wouldn't say it was notorious unless there was some established consensus on the matter. There could be dozens of people who feel this way, hundreds even.

Anyway, it's a better answer than, "I'm sure everything is fine why do you even care anyway lol?".

The UI answer

The Search Console report item, in its entirely, looks like:

Discovery

Sitemaps No referring sitemaps detected

Referring page None detected

URL might be known from other sources that are currently not reported

The fact that this seemingly erroenous information is listed in the 'discovery' section seems to agree with Gupta from above; the url was pulled from some database that doesn't reveal its origination information. That's reasonable enough, though listing each of the possible discovery methods when only one is valid fits neither traditional design norms nor Google's minimalist aesthetic. More importantly,

the unused discovery result should be labeled, "this method lost the race to this url" rather than "we didn't detect anything using this method".

Finding answers entertainment on the internet

Google's top results for my issue linked to several February 2023 threads on their support forum. That's theoretically good, except that the answers were all non-answers from "Diamond-Tier Lackey Contributors" and then the threads were locked. And

I never did get a definitive answer, though I didn't look too far down the search results. And that's for three reasons:

- I didn't care all that much.

- I've learned not to look past result #3 with SEO questions because it's either autogenerated nonsense or paywalled.

- I got distracted by catching up with /r/seo.

DaveMcG DaveMcG |

I am amazed by the number on posts on r/SEO where the OP doesn't even use google to answer their own quesitons first.

|

I did a double take, sprayed my Mountain Dew Code Red everywhere, and had to check the url. Yep, it was a real question. The responses were good:

|

/u/tmac_79

Everyone knows you can't trust search engine results... people manipulate those.

|

|

/u/mmmbopdoombop

ironically, sometimes the best advice is doing a Google search for your question followed by 'reddit'. We SEOs killed the regular results.

|

SEO, blogs, and the topography of the realnet

DrJigsaw DrJigsaw |

There is a TON of outdated info about link-building on the net.

Here's what DOESN'T work these days:

- Forum link-building. Most forums no-follow all outgoing backlinks.

- Web 2.0 links. People spamming their links on Reddit are 100% wasting their time. Google can tell a user-generated content site apart from all other sites. Hence, links from Reddit, Medium, etc. are devalued big-time.

- Blog comment links. Most blogs no-follow blog comment links, so that's a waste of time too.

- PBNs (ish). Well-built PBNs work just fine. The PBNs you bought from some sketchy forum, though, will crash your site big time.

So what DOES work?

Real links from real, topically related websites.

E.g. if you run a fitness site, you'd benefit from getting links from the following sites:

- Authoritative fitness blog/media

- Small-time yoga blog

- Weight loss blog/media/site

|

I don't know how true this information is, but for the moment I'll proceed as if it's 90% accurate.

I was surprised to see blogs come up on a post about SEO. Sure there are some popular bloggers, but it's weird to me that something like "Britney's Yoga Blog" would elevate a page more than even the smallest of mentions on one of the popular 2.0 platforms. To be quite honest, I'm not sure links from Britney's Yoga Blog should be all that influential to search ranking.

The other way to look at it: if the internet is just social media, corp pages, and blogs/personal sites,

blogs are the only places where backlink gains can be made. If you're doing SEO and the search engines have stopped ranking social media, you're unlikely to talk JPMorgan or Ars Technica into backlinking you, so you're left with the amateurweb.

This feels simultaneously cynical and hopeful for the indieweb.

Reddit, AI training data, and monetization

|

|

|

Reddit decided on an out of cycle return of /r/place, their crowdsourced graffiti wall. It went as expected. |

I read

an opinion last month that

Reddit's controversial API price hike was about repricing their data for the deep pockets of Big AI. I appreciated the new perspective but didn't personally find the argument very compelling.

The simple explanation seemed far more likely and far more on-brand. There may yet be an AI twist, brought about by yet another uncreative attempt by Spez to game Reddit's valuation. From an admin announcement:

|

/u/Substantial_Item_828

> In the coming months, we'll be sharing more about a new direction for awarding that allows redditors to empower one another and create more meaningful ways to reward high-quality contributions on Reddit.

Sounds ominous.

|

|

|

/u/Moggehh

It already broke in the APK notes. They're adding tipping for US redditors.

|

The APK notes, in part:

|

Fake internet points are finally worth something!

Now redditors can earn real money for their contributions to the Reddit community, based on the karma and gold they've been given.

How it works:

* Redditors give gold to posts, comments, or other contributions they think are really worth something.

* Eligible contributors that earn enough karma and gold can cash out their earnings for real money.

* Contributors apply to the program to see if they're eligible.

* Top contributors make top dollar. The more karma and gold contributors earn, the more money they can receive.

|

Incentivizing popular content creation sounds like a strategy to overcome the post-blackout brain drain while creating a new revenue source. On the other hand,

creating an influencer class to replace the powermod class might accelerate the platform's downfall.

The horse and cart may be reversed here. Perhaps Reddit was long planning to introduce this tipping system but

first needed to cut off API access so not everyone could create an LLM-trained money farmer. Scraping and data mirrors would still be viable, but not as easy as a pushshift query.

Reddit might also create a few bots of their own. Having Redditor-surrogate LLM bots would (in their mind) increase engagement and net them tips that don't have to be shared with flesh and blood users. It wouldn't be unprecedented. Something that came up in API controversy (but was largely eclipsed) was that

Reddit had quietly been translating popular English-language content to other languages and regions. When asked by moderators, the admins said, "hey this is popular and we thought we'd see if it was of interest to your community". It's not strictly a bad idea but bore all the hallmarks of

a revenue-motivated engagement experiment.

Speaking of in-house AI, X

NBC News

NBC News |

On Sunday, Twitter CEO Linda Yaccarino said the branding change will introduce a major pivot for the microblogging platform, which she said will become a marketplace for "goods, services, and opportunities" powered by artificial intelligence.

"It's an exceptionally rare thing - in life or in business - that you get a second chance to make another big impression," the chief tweeted. "Twitter made one massive impression and changed the way we communicate. Now, X will go further, transforming the global town square."

|

Welp,

he said he was creating a WeChat knockoff (er, "X, the everything app")

back in October. It's just kind of funny that the bird logo was replaced on a Sunday afternoon but everything else still said Twitter/tweet/etc.

PaulHoule

PaulHoule |

The next question is: "Is he really serious about the super app?". The horror is that he probably is, but what business wants to deal with a mercurial leader who might stop payments, pay people extra, or impound money in your account for no good reason. What business is going to want to put an "X" logo up by their cash register when it means they are going to have arguments with customers. (I bet it will be a hit for "go anti-woke and go broke" businesses though.)

|

I, for one, can't wait to contact Xpay customer service about fraudulent activity on my Xaccount only to receive a poop emoji reply.

heyjamesknight

heyjamesknight |

I've been mostly ambivalent about the Musk-era at Twitter-mostly because I just don't care enough to have an opinion.

This, though. This one makes me angry and disappointed.

Twitter has had such a solid brand for so long. It's accomplished things most marketers only dream of: getting a verb like "Tweet" into the standard lexicon is like the pinnacle of branding. Even with all of the issues, "Twitter" and its "Tweets" have been at the core of international discourse for a decade now.

Throwing all of that away so Elon can use a domain he's sat on since '99 seems exceedingly foolish.

|

Back to SEO

From a Hacker News link:

Izzy Miller

Izzy Miller |

Last week, I found a glitch in the matrix of SEO. For some reason, every month 2,400 people search for the exact string "a comprehensive ecosystem of open-source software for big data management".

And weirdly, there are ~1,000 results for the exact query "A comprehensive ecosystem of open-source software for big data management". This is at once a weirdly small and weirdly large number- small because most Google searches have tens of millions of results, but large because most Google searches for exact string matches of that length actually turn up few, or no results. So there's something to this phrase.

This means that thousands of students started searching for "a comprehensive ecosystem of open source software for big data management" every month as they studied for their final IoT exam. And the SEO analytics dashboards noticed.

The really interesting thing about this case though, is that the original source content driving this search interest is not publicly available or indexed. This query is copied and pasted verbatim from an exam, which are famously not something you want to be found on Google.

|

To summarize Miller's conclusion:

web marketers noticed that the test question search term was unexploited territory so they quickly spun up autogenerated pages for this highly specific phrase.

It seems that in addition to a dark forest

there is a well-illuminated forest full of plastic trees.

Izzy Miller

Izzy Miller |

This is only going to get weirder with LLMs, at least in the interim before everyone stops using Google. There's now a ton of tools that automatically find low competition keywords and generate hundreds of AI blog posts for you in just a few minutes.

The real problem is just the lack of alignment these articles have with search intent- if you want people to land on your site and remember you favorably, you should just answer their question. Everything else is extraneous. I don't have high hopes for AI accurately determining the intent of all the strange keyword combinations out there, and so I expect we'll see more and more of these glitches.

Perhaps, only 4chan can save us with their reputation for kindness and straight to the point questions and answers...

|

"Accurately determining the intent" and a Reddit bamboozle

Forbes

Forbes |

As someone who writes about video games for a living, I am deeply annoyed/terrified about the prospect of AI-run websites not necessarily replacing me, but doing things like at the very least, crowding me out of Google, given that Google does not seem to care whatsoever whether content is AI-generated or not.

That's why it's refreshing to see a little bit of justice dished out in a very funny way from a gaming community. The World of Warcraft subreddit recently realized that a website, zleague.gg (I am not linking to it), which runs a blog attached to some of sort of gaming app which is its main business, has been scraping reddit threads, feeding them through an AI and summarizing them with "key takeaways" and regurgitated paragraphs that all follow the same format. It's gross, and yet it generates an article long enough with enough keywords to show up on Google.

Well, the redditors got annoyed and decided to mess with the bots. On r/WoW, they made a lengthy thread discussing the arrival of Glorbo in the game, a new feature that, as you may be able to guess from the name, is not real.

|

Never thought I'd lol side by side with a Blizzard fan.

The Portal

The Portal |

Reddit user malsomnus hails it as the best change since the quest to depose Quackion, the Aspect of Ducks.

|

Moment of zen

Blogroulette sent me to this awesome post about a game I now want to play:

merritt k

merritt k |

Explaining All of the Fake Games From Wayne's World on the SNES

Or maybe you instead decide to create an elaborate framing story where Wayne and Garth are talking about terrible games they've been playing at Noah's Arcade, the company that sponsors their show in the film. That's what Gray Matter did back in 1993, opening the game with some pixelated approximations of Mike Myers and Dana Carvey listing some titles with hacky joke names in a bit that somehow sets up the entire bizarre adventure.

It's kind of a bold move to open a terrible game with a list of fictional terrible games, but what exactly were these titles supposed to be? Here are my best guesses.

|

We flew up to Washington to visit

Jon and

Tori and Jack and Pepper.

The flight was almost completely uneventful.

Leaving in the evening was a refreshing change from all of our recent early departures.

Since our last Seattle

visit to dogsit Pepper,

Jon and

Tori added chickens, Rody, and also Jack.

On the drive up we stopped at

Snoqualmie Falls. The overlook was neat but it was even better to hike down and get our feet/paws wet.

The cabin was spacious and well-appointed, situated halfway between the lake and the tiny town of Ronald (next to the slightly larger town of Roslyn).

Cle Elum lake was scenic but for our sole 2pm visit it was subject to a constant driving wind. Danielle,

Jon, and Pepper didn't mind, they happily got in the water where the wind wasn't espcially windy. Before we left we got an impressive Chinook flyover.

Wind quote of the day went to:

Danielle Danielle |

I'm eating air!

|

After

two nights, a trip to Dru Bru brewery, and one bear, we headed back to the city, stopping briefly at Gold Creek Pond. This water was clearer and colder than Cle Elum, so only Pepper went in. We got an F-18 flyover that was rather loud.

|

|

|

I didn't put in enough effort to make either a good graphic or an ironic napkin graphic. |

I implemented a new feature for this site,

the footer of each new post is going to have three of these guys:

Minor detail: I don't show them in full-month view to keep things a bit cleaner. Autogenerating sneak peeks of other posts felt kind of dirty since that's what all the commercialized sites do, but it helps with navigating similar subjects.

You may have noticed that I just mentioned a feature but my post title is "Feature request". We've now arrived at that part.

I want to do the post preview thing I just mentioned, but with other websites, especially from the so-called indieweb.

Rule 34 of internet development says

this thing already exists and that's probably true, but I haven't found it. The closest analogue: a lot of sites provide links to content "around the web" that are just advertisements with context-adapted titles. I want this notional feature, but I want the other end of the href to be real content that is not selling anything. Two more asks:

- I don't want to get paid for linking to someone else's site.

- I don't want to have to discover the pages I'm linking to. A database should do that.

Architecture

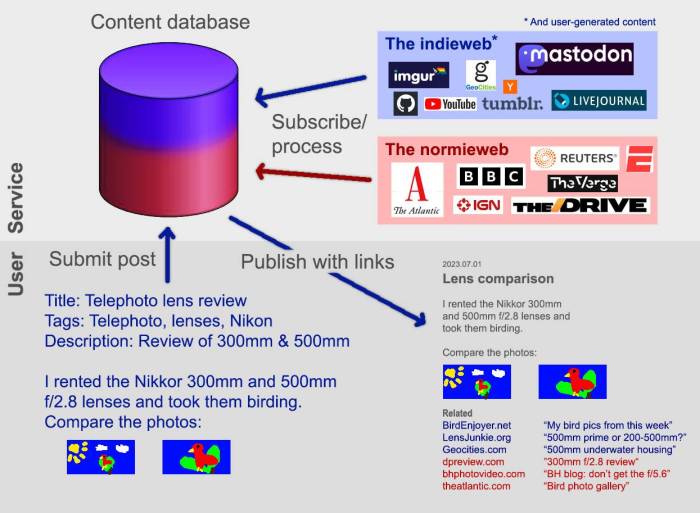

Referring to the diagram above, the bottom half shows what I want to integrate into my publishing process.

I submit my post information in one or more standards that already exist:

- RSS

- Page metadata tags

- Page text blob

The

service gives me a few links to put at the bottom of my post that are timely and relevant (more on this later).

The top half of the diagram shows the service: a database of content aggregated via user submissions (e.g.

indieblog.page) and subscriptions (e.g. RSS). In the diagram there are

two partitions: community content and mainstream content. The latter isn't critical to this design, but when I was thinking about Reddit replacements and how accessible commercial feeds are, adding Associated Press links is a short putt. Similarly, with my recent geopoliticsposting, anyone that survives to the end of the post might want to see what legitimate information sources have to say.

As a publisher, I want:

- A simple mechanism to submit my post/feed to the big database and instantly get links I can add to my post.

- Link metadata (title, thumbnail, description, tags) in a generic format that I can present in my site's visual style.

- To have incoming links that point to the relevant content. If someone lands here while browsing garage floor surfacing ideas, I don't want them to be bothered by my 3,000 word review of the Superman 64 re-release.

As a reader, I want:

- Relevant links to the material I'm reading.

- Recent links, though in certain cases I prefer relevance to recency.

- Good content, not something autogenerated or overly terse or above/below my technical level.

That's it

It really is. It's a basic database, some undemanding subscription/discovery services, and some simple clientside html to have the whole thing going soup to nuts.

Everything south of this sentence is just expanding on the 'why' and the 'how'.

SWOT

I was going to use this ironically but then ended up following the format.

Strengths

- It doesn't need to be comprehensive to be useful. Getting feeds from 20% of the indieweb and 10% of mainstream media is sufficient to provide really good link material.

- It's self-sustaining. Submitting a page to gather recommended links organically grows the database.

- It's simple for developers. Post xml, get xml, append to post.

- It's mutualistic. It addresses the indieweb discoverability issue and is preferable to spamming links and other dirty SEO tricks. It even uplifts the entire indieweb since external references are one of the main metrics for search rank.

- It doesn't require mass adoption to work. The database can be built and grown with one subscriber or a million subscribers. The link quality is based entirely on the database and recommendation algorithm.

Weaknesses

- It doesn't facilitate discussion. It's not meant to, but if we're talking about the post-Reddit world, it's worth noting that this is closer to Reddit's link aggregator role than its discussion role.

Opportunities

- Search. The database could, of course, be linked to a search page.

- Link quality ratings. I mentioned link selection based on similarity and timeliness, there would certainly be a benefit to user-provided link scoring (active or passive).

Threats

- Garbage content/spam. Anything popular on the internet is a magnet for opportunists. Luckily we have opaque algorithms and shadowbanning.

The bigger picture and Web 1.1

nunez

nunez |

[Twitter putting up a login wall] killed nitter.

Fuck.

I guess I'm done with Twitter.

Reddit is in Eternal September. Twitter is login-walled. If HN is next, I'll probably be mostly done with the Internet.

This version of the Internet is starting to suck. :(

|

"Let's go back to the old web" is a common refrain amongst geeks and people burned out on social media, engagement capture, SEO, influencers, astroturfers and all the other commercial nonsense. We're never going to actually go back though,

the best we can do is branch and hope the old-new thing is better than the current thing.

Recapping the internet, from 14.4 to present day:

- Before September: bulletin boards and other stuff before my time.

- The old web: portals, blogs, forums, under construction gifs.

- Web2.0: social media. It briefly showed the power of the internet to connect people but quickly turned into walled gardens.

- Web3: like VR or something? It was a buzzword for a little while.

I've rolled the indiepage die more than a few times and can tell you that

the blogosphere isn't all gold. Some notable issues:

- There are way too many web developers posting javascript. That's both strictly true and my euphemistic way to say that if I threw a dart at the blog board, I'd hit something that interests me only occasionally.

- Blogs, Discord/YouTube/etc channels, and the indieweb are sparse sources of information. They're like reading random articles in a newspaper rather than the front page.

- It's impossible to navigate the indieweb. Posts sometimes have links, sites sometimes have a link page. That's it.

- From the writer's perspective, discoverability amounts to spamming backlinks rather than letting content quality guide users to you. Site note: I don't spam backlinks, but that's what people have to do.

Last year I read a post about the indieweb being a

dark forest;

its issues are visibility and navigation. Quality and variety aren't an issue. LinkedIn hasn't gobbled up all of the off-hours posting by credible professionals. Hacker News is like 25% links to blogs or niche sites where people know what they're talking about. Just yesterday after a few blogroulette spins I saw an amazing, tongue-in-cheek, disappointed

review of Far Cry 4 because it was

a ten minute walking simulator. Many such cases!

Since this post started with the solution and ended with the problem, I needn't now recap why my humble feature request solves

the dart board problem, the linking problem, and the discoverability problem. Fundamentally, the internet is about navigation and connections, the old web just lost some of that when Tom and Zuck took over.

There's a saying on WallStreetBets, "the real DD (due diligence) is in the comments". It's a half-truth, reading replies absolutely is not financial due diligence and plenty of commenters are, uh, not successful investors. On the other hand,

the comment section is where the critical thinking lives (because OP probably didn't analyze his own post objectively).

This theoretically applies everywhere, but as Reddit slides into

'enshittification' I'll talk about why that particular site was so successful.

Cunningham's Law

"The best way to get the right answer on the internet is not to ask a question; it's to post the wrong answer." That is,

people on the internet don't want to be helpful, they want to be right. That's not always a recipe for civility (see also Godwin's Law) but it can be a recipe for finding answers. StackOverflow has long been the go-to resource for coders, if you look past the initial question it's all people wanting to be rightest and smartest.

Returning to Cunningham's Law,

if OP's post is bad, the comments will illustrate why that is the case. If OP's post is good, someone will say "ackshually..." but promptly be proven wrong. And I'm using right/wrong out of convenience, many things aren't so polar but the principal still applies.

Reddit and Google and SEO

|

/u/Leshawkcomics

Reddit is one of the last few places you can get information off google.

You ask google "How do i handle X" and it will sell you 20 sponsored answers and adverts that don't actually solve your issue.

You ask google "How do i handle X 'REDDIT'" and it will show you 20 reddit threads with people who had your exact same issue and many of which have answers you didn't know you needed.

Doesn't matter if its daily life, community stuff, gaming tips, cooking, cleaning, frugality etc.

You get actual answers from people and not buzzfeed articles or pintrest posts or advertisements.

|

|

|

/u/MontyAtWork

Do you want to know how to fix your PC graphics card? Many people have complex issues from their personal computing but it's not as complicated as it seems. To understand how to fix your PC graphics card, you first need to understand a few basics. A PC stands for personal computer and can have multiple components, including a PC graphics card. When users need help fixing their PC graphics cards, it can be a costly replacement to have someone else do for you, but it can easily be done by yourself. By understanding how a PC graphics card works, you can start troubleshooting issues with your PC graphics card right away, so you can quickly return to using your PC graphics card for business or personal use. When trying to fix your PC graphics card, one should consult a user manual before attempting to fix your PC graphics card. This can help keep you safe when trying to fix your PC graphics card as components can break if you are not careful. By not following the directions in the user manual of your PC graphics card...

|

This phenomenon exists in part because SEO destroyed search and in part because Reddit threads have good information. I don't want to focus on Reddit too much, but having been part of a few internet communities in my time, it was the most successful discussion forum. Here's why:

- Volume/accessibility: Reddit was a large site that could be viewed without a login and, until recently, posted to without even providing an email.

- Voting: Reddit's accessibility could have been a dangerous flood of information were it not for the voting system (and moderation). It isn't perfect, in-jokes and reused content get a lot of visibility.

- Enthusiast-oriented: Reddit was designed around enthusiast communities (politics, quilting, trees, etc.). You're more likely to get a good answer about solar in a solar community than a Mincraft Discord. Again it's not perfect, if you ask an Apple community how to access your phone's filesystem you'll most likely get, "Why would you want to do that? Just use icloud."

- Reputation: while Reddit supported throwaway accounts and nuking your identity, it also fostered committment and people concerned about their credibility.

- Threaded view and information density: olden-day forums were single-thread. If someone posted a cat pic, you could have a half-dozen dog pics before you could reply "nice cat pic". The Reddit comments section used threading, so the context for a comment was always easy to track. This is especially important with adversarial (but constructive) discourse.

- Sauce: Reddit supported quotes and hyperlinks, so commenters could ask for or provide attribution.

Each of these contributed to the success of Reddit as a discussion forum, looking at other sites its easy to spot the gap. 4chan is, well, a bit chaotic - threading/replies aren't especially intuitive and the lack of voting means you see everything. Hacker News is more or less a clone of Reddit but it lacks user volume and content diversity to be a web phenomenon. News sites, blogs, enthusiast forums, and YouTube often have comments sections that are deficient in two or three of the categories mentioned above.

There's another site that failed to duplicate Reddit's success, it's called Reddit. Years ago, Reddit redesigned their main site to maximize ad visibility and engagement. The new design lacked the thread visibility and information density described above and was largely the reason for their recent third-party app drama.

|

|

|

Since old Reddit was left to die, inline images were never implemented. |

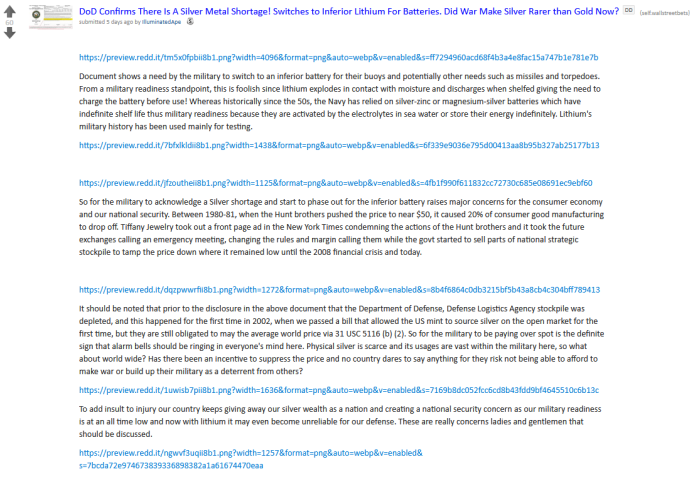

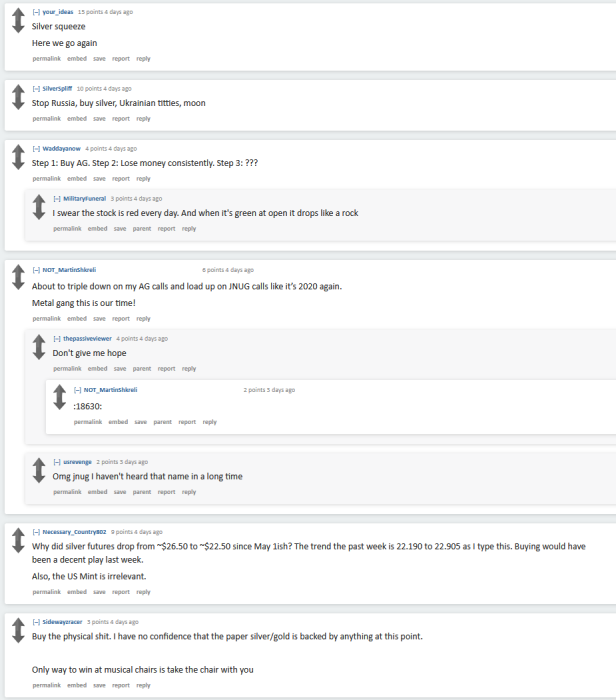

Here's a trash post in WallStreetBets. The 'silver squeeze' has been a dumb pump and dump since the Gamestop saga brought an influx of astroturfers.

Democracy wins, OP is laughed at.

New Reddit. Just lmao.

The bottom line

I've found myself revisiting the phrase "the real DD is in the comments" in a variety of context, so it made sense to provide the full explanation.